The Bing/ChatGPT Deal is a Sign of Failure

The other day I saw a post on Reddit about Microsoft integrating OpenAI's ChatGPT into Bing.

This comes from an article in The Information by Amir Efrati.

The Reddit user asked if this was going to be a game changer for Bing. In the most glib way possible I responded:

How is this substantially different from rich results? It's not.

While it is not clear exactly what it means to integrate ChatGPT into Bing, The Information article suggests that the generative AI could summarize the results of a query, making search results more user-friendly.

Microsoft's Failure

These stories say far more about Microsoft's failure than about any threat to Google. Why is Microsoft going to some outside company to get this technology?

I am struck (and maybe I shouldn't be) by how certain parts of the tech press have fallen all over themselves talking up how this is the end of Google, and how this big move shows how far behind Google is. There have been several stories about how the success of ChatGPT has set off alarm bells at Google.

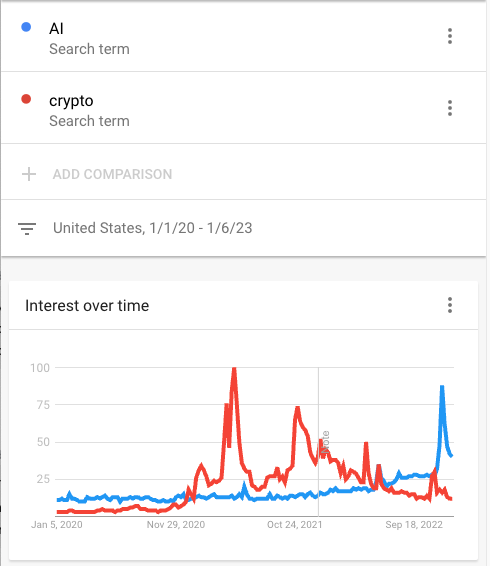

As someone who has spent nearly 15 years thinking about search and Google professionally, I call bullshit. We know Google is spending billions of dollars a year on AI research. For a certain type of person, integrating a trendy generative AI tool into Bing is an a priori success for Microsoft. We don't even need to fully understand what such an integration would look like before we pronounce Google Search dead. But also...it seems kind of strange. Suddenly a certain type of person is spending a lot of time talking about the AI revolution. Does it remind you of something?

Oh...I see.

I think there are two things going on here:

A willingness to engage in a bad faith discourse about what this means for users.

See above chart. This is useful idiot nonsense. We are seeing VCs and their favored media outlets talking up trendy startups with little care about how this will or will not affect users. The best example of this is the years-long gold rush of voice search.

I am going to save Silicon Valley pump and dump nonsense for people who have a better handle on the business part. Instead, let's think about the issues with generative AI and how it will actually affect search.

Is Google already a chatbot?

Yes.

Like my snarky Reddit comment says, how are the rich results substantially different from asking a chatbot to summarize a topic?

Think about it like this: in both web search and AI chatbots you start with a blank text box. The thing you put into that text box is an implied question. The results of both are answers to that question.

With Google, you get a summary of the query in the form of rich results, some ads, and the 10 webpages Google thinks answer the query. If users want to go deeper, there are links to the underlying websites. ChatGPT, on the other hand, provides users with a prose response to their inputs. This response can then be refined through additional queries. But there are many limitations to ChatGPT; it lacks real-time information and cannot cite sources. Bing would need to provide a solution to this.

So really what we are talking about is Bing using generative AI to write summaries instead of, or in addition to, rich results. Bing is making a UX decision that assumes that users will get more out of prose summaries than rich snippets drawn from original sources. This is not the worst idea, but I have a hard time seeing how an AI-generated prose summary helps users. There is pretty good evidence that rich results reduce click-through rates. Bing has had about 8% market share for a long while. They could probably get away with adding a summary. But publishers would riot if Google started using an AI to create descriptive summaries of a query. Publishers already hate rich results. But at least those models have links to the underlying results.

The Actual Problem with Adding Generative AI Summaries to Search Results (and Generative AI in General)

But there is a bigger issue. ChatGPT in particular, but generative AI in general, doesn't know if it is right or wrong. It has no concept of true or false. Users are going to see the prose responses to critical queries and take them at face value. I am not sure this is a problem that can be solved through UX design. We only need to look back at the last 10 years to see how misinformation can spread online. Microsoft would likely need to limit the kinds of searches where AI-generated summaries would appear.

This is why Google has not released their own version of ChatGPT and why they have stuck to focusing on improving rich search results. There is a real risk to both Google and their users. Google's own search quality guidelines outline how topics that affect users' health and livelihood are given extra scrutiny. They call these "Your Money or Your Life" (YMYL) topics. These "topics can directly and significantly impact people’s health, financial stability or safety, or the welfare or well-being of society... Pages on clear YMYL topics require the most scrutiny for Page Quality rating." This is a flag for Google as much as it is for publishers that rely on Google for traffic. They understand that giving false information can be harmful. The risks of allowing an AI chatbot to generate a prose summary are simply too high, and too unknown, for it to be deployed without significant guardrails.

This is the mistake I think Microsoft and Bing are about to discover. To this point, generative AI has been a novel web toy. We are starting to see some interesting use cases for professionals, but it is still very early days. Microsoft rushing ahead shows that they have not really thought through its implications. It demonstrates what we have known for a long time: Microsoft's search product is a failure.